What OpenFlow is (and more importantly, what it’s not)

Posted: June 5, 2011. Filed under: OpenFlow, SDN

There has been a lot of chatter on OpenFlow lately. Unsurprisingly, the vast majority is unguided hype and clueless naysaying. However, there have also been some very thoughtful blogs which, in my opinion, focus on some of the important issues without elevating OpenFlow to something it isn’t.

Here are some of my favorites:

- Notes on OpenFlow by James Liao

- OpenFlow is Like IPv6 by Ivan Pepelnjak

- On data center scale, OpenFlow, and SDN by Brad Hedlund

I would suggest reading all three if you haven’t already.

In any case, the goal of this post is to provide some background on OpenFlow, which seems to be missing outside of the crowd that created it and has been using it.

Quickly, what is OpenFlow? OpenFlow is a fairly simple protocol for remote communication with a switch datapath. We created it because existing protocols were either too complex or too difficult to use for efficiently managing remote state. The initial draft of OpenFlow had support for reading and writing to the switch forwarding tables, soft forwarding state, asynchronous events (new packets arriving, flow timeouts), and access to switch counters. Later versions added barriers to allow synchronous operations, cookies for more robust state management, support for multiple forwarding tables, and a bunch of other stuff I can’t remember offhand.
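
To make “fairly simple” concrete: every OpenFlow message begins with the same small fixed header. The sketch below packs and unpacks that header using the OpenFlow 1.0 layout (version, message type, total length, and a transaction id `xid`, all in network byte order). Only a handful of the 1.0 message-type codes are listed, message bodies are left out, and the helper names are illustrative, not taken from any controller library.

```python
import struct

# OpenFlow 1.0 wire version and a few of its message type codes.
OFP_VERSION_1_0 = 0x01
OFPT_HELLO = 0
OFPT_PACKET_IN = 10        # asynchronous: a new packet arrived at the switch
OFPT_FLOW_REMOVED = 11     # asynchronous: a flow timed out or was deleted
OFPT_FLOW_MOD = 14         # write to the forwarding table
OFPT_BARRIER_REQUEST = 18  # used for synchronous operation

# Every message starts with this 8-byte header, in network byte order:
# version (uint8), type (uint8), length of the whole message (uint16), xid (uint32).
OFP_HEADER = struct.Struct("!BBHI")


def encode_message(msg_type: int, xid: int, body: bytes = b"") -> bytes:
    """Prefix a (pre-encoded) message body with an OpenFlow 1.0 header."""
    length = OFP_HEADER.size + len(body)
    return OFP_HEADER.pack(OFP_VERSION_1_0, msg_type, length, xid) + body


def decode_header(data: bytes) -> tuple:
    """Return (version, type, length, xid) parsed from the first 8 bytes."""
    return OFP_HEADER.unpack_from(data)


hello = encode_message(OFPT_HELLO, xid=1)
print(hello.hex())           # 0100000800000001
print(decode_header(hello))  # (1, 0, 8, 1)
```

The `xid` is what lets a controller match replies and errors to the requests that caused them, which matters once you start managing remote state in earnest.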

Initially, OpenFlow attempted to define what a switch datapath should look like (first as one large TCAM, and then as multiple tables in succession). However, in practice this is often ignored, as hardware platforms tend to be irreconcilably different (there isn’t an obvious canonical forwarding pipeline common to the chipsets I’m familiar with).
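
The “multiple tables in succession” idea can be modeled in a few lines. This is a deliberately simplified, hypothetical model rather than OpenFlow’s own data structures: each table holds prioritized entries whose absent match fields act as wildcards, the highest-priority match wins, and a winning entry contributes actions and may hand the packet on to a later table. Real chipsets diverge from any such canonical pipeline, which is exactly the problem described above.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FlowEntry:
    priority: int
    match: dict                        # field -> required value; absent fields are wildcards
    actions: list = field(default_factory=list)
    goto_table: Optional[int] = None   # continue the lookup in a later table


def matches(entry: FlowEntry, packet: dict) -> bool:
    return all(packet.get(k) == v for k, v in entry.match.items())


def run_pipeline(tables: list, packet: dict) -> list:
    """Walk the tables in order, collecting actions from the best match in each."""
    actions: list = []
    table_id: Optional[int] = 0
    while table_id is not None:
        candidates = [e for e in tables[table_id] if matches(e, packet)]
        if not candidates:
            return ["send_to_controller"]  # table miss (an assumed policy)
        best = max(candidates, key=lambda e: e.priority)
        if best.goto_table is not None and best.goto_table <= table_id:
            raise ValueError("goto_table must point to a later table")
        actions.extend(best.actions)
        table_id = best.goto_table
    return actions


tables = [
    # Table 0: classify by VLAN.
    [FlowEntry(10, {"vlan": 5}, goto_table=1), FlowEntry(0, {}, ["drop"])],
    # Table 1: forward by destination MAC.
    [FlowEntry(20, {"dst": "aa"}, ["output:3"]), FlowEntry(0, {}, ["flood"])],
]
print(run_pipeline(tables, {"vlan": 5, "dst": "aa"}))  # ['output:3']
print(run_pipeline(tables, {"vlan": 7}))               # ['drop']
```

Mapping even this toy onto a specific chip means deciding which tables live in TCAM, which in exact-match hash tables, and which fields each stage can actually see.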

And just as quickly, what isn’t OpenFlow? It isn’t new or novel; many similar protocols have been proposed. It isn’t particularly well designed (the initial designers and implementors were more interested in building systems than designing a protocol), although it is continually being improved. And it isn’t a replacement for SNMP or NETCONF, as it is myopically focussed on the forwarding path.

So, it’s reasonable to ask, why then is OpenFlow needed? The short answer is, it isn’t. In fact, focussing on OpenFlow misses the point of the rationale behind its creation. What *is* needed are standardized and supported APIs for controlling switch forwarding state.
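
What such an API has to offer is small. As a hypothetical sketch (the class and method names are invented for illustration), the contract below covers writing, deleting, and reading back forwarding entries; controller logic written against it would not care whether OpenFlow or some other transport sits underneath. Using a `cookie` as the unique key for each entry is a simplification of this sketch, not how OpenFlow defines cookies.

```python
from abc import ABC, abstractmethod


class ForwardingStateAPI(ABC):
    """Hypothetical minimal contract for remotely managing one switch's forwarding state.
    OpenFlow, or a simple RPC schema over protobuf/thrift/JSON, could implement it."""

    @abstractmethod
    def install(self, cookie: int, match: dict, actions: list) -> None:
        """Add or overwrite the entry identified by `cookie`."""

    @abstractmethod
    def remove(self, cookie: int) -> None:
        """Delete the entry identified by `cookie`, if present."""

    @abstractmethod
    def dump(self) -> dict:
        """Return the switch's current entries, keyed by cookie."""


class InMemorySwitch(ForwardingStateAPI):
    """A fake switch for exercising controller logic without hardware."""

    def __init__(self) -> None:
        self._flows: dict = {}

    def install(self, cookie: int, match: dict, actions: list) -> None:
        self._flows[cookie] = (dict(match), list(actions))

    def remove(self, cookie: int) -> None:
        self._flows.pop(cookie, None)

    def dump(self) -> dict:
        return dict(self._flows)
```

Being able to read state back (`dump`) is as important as writing it: it is what lets a controller reconcile what it believes is installed with what actually is.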

Why is this?

Consider system design in traditional networking versus modern distributed software development.

Traditional Networking (Protocol design):

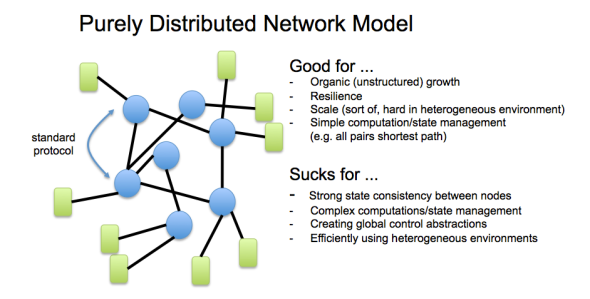

Adding functionality to a network generally reduces to creating a new protocol. This is a fairly low-level endeavor, often consisting of worrying about the wire format and the state distribution algorithm (e.g. flooding). In almost all cases, protocols assume a purely distributed model, which means they must handle high rates of churn (nodes coming and going) and heterogeneous node resources (e.g. CPU), and almost certainly means the state across nodes is eventually consistent (often the bane of correctness).
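
A toy model shows where that eventual consistency comes from. The sketch below (hypothetical names, synchronous rounds, no loss or churn) floods one sequence-numbered update roughly the way link-state protocols do: a node stores and re-forwards an update only if it is newer than what it already holds. Until the last round, some nodes are acting on stale state.

```python
def flood(graph: dict, origin: str, seq: int, data: str, dbs: dict) -> list:
    """Flood one sequence-numbered update from `origin` in synchronous rounds.

    graph: node -> list of neighbours; dbs: node -> {origin: (seq, data)}.
    Returns, for each round, the sorted list of nodes holding the new update.
    """
    dbs[origin][origin] = (seq, data)
    frontier, history = [origin], []
    while frontier:
        next_frontier = []
        for node in frontier:
            for nbr in graph[node]:
                known = dbs[nbr].get(origin)
                if known is None or known[0] < seq:  # only accept newer state
                    dbs[nbr][origin] = (seq, data)
                    next_frontier.append(nbr)
        frontier = next_frontier
        history.append(sorted(n for n in dbs if dbs[n].get(origin) == (seq, data)))
    return history


line = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
dbs = {n: {} for n in line}
for snapshot in flood(line, "a", 1, "link a-b up", dbs):
    print(snapshot)
```

On this four-node line the update reaches one new node per round, so for two rounds the nodes hold different views of the network.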

In addition, to follow the socially acceptable route, somewhere during this process you bring your new protocol to a standards body stuffed with vendors and (likely) spend years arguing with a bunch of lifers over exactly what the spec should look like. But I’ll ignore that for this discussion.

So, what sucks about this? Many things. First, the distribution model is fixed. It’s *much* easier to build a system where you can dictate the distribution model (e.g. a tightly coupled cluster of servers). Second, it involves a low-level fetish with on-the-wire data formats. And third, there is no standard way to implement your protocol on a given vendor’s platform (and even if you could, it isn’t clear how you would handle conflicts with the other mechanisms managing switch state on the box).

Developing Distributed Software:

Now, let’s consider how this compares to building a modern distributed system. Say, for example, you wanted to build a system that abstracts a large datacenter, consisting of hundreds of switches and tens of thousands of access ports, as a single logical switch to the administrator. The administrator should be able to do everything they can do on a single switch: allocate subnets, configure ACLs and QoS policy, etc. Finally, assume all of the switches support something like OpenFlow.

The problem then reduces to building a software system that manages the global network state. While it may be possible to implement everything on a single server, for reliability (and probably scale) it’s more likely you’d want to implement this as a tightly coupled cluster of servers. No problem; we know how to do that. There are tons of great tools available for building scale-out distributed systems.
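
The heart of such a system is a translation from the administrator’s logical view to per-switch state. As a hypothetical sketch (topology, port numbers, and names are invented; core-switch rules, ACLs, and QoS are omitted), here is how entries in the single logical switch’s MAC table might be compiled into rules for each top-of-rack switch. The output is the kind of state that would then be pushed to each switch over OpenFlow or a similar interface.

```python
# Hypothetical two-tier fabric: every top-of-rack (ToR) switch has one uplink to the core.
LOGICAL_PORTS = {1: ("tor-1", 5), 2: ("tor-2", 9)}  # logical port -> (ToR, physical port)
UPLINK = {"tor-1": 48, "tor-2": 48}                 # ToR -> port facing the core


def compile_l2_forwarding(mac_table: dict) -> dict:
    """Translate logical MAC -> logical-port entries into per-ToR MAC -> physical-port rules."""
    rules = {tor: [] for tor in UPLINK}
    for mac, lport in mac_table.items():
        home_tor, home_port = LOGICAL_PORTS[lport]
        for tor in rules:
            # Deliver locally on the MAC's home ToR; everywhere else, send toward the core.
            out_port = home_port if tor == home_tor else UPLINK[tor]
            rules[tor].append((mac, out_port))
    return rules


print(compile_l2_forwarding({"00:00:00:00:00:aa": 1, "00:00:00:00:00:bb": 2}))
```

Because this logic runs on servers rather than inside a protocol, it can be rewritten, tested, and redeployed like any other software.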

So why is building a tightly coupled distributed system preferable to designing a new protocol (or more likely, set of protocols)?

- You have fine grained control of the distribution mechanism (you don’t have to do something crude like send all state to all nodes)

- You have fine grained control of the consistency model. You can use existing (high-level) software for state distribution and coordination (for example, ZooKeeper and Cassandra)

- You don’t have to worry about low-level data formatting issues. Distributed data stores and packages like thrift and protocol buffers handle that for you.

- You (hopefully) reduce the debugging problem to a tightly coupled cluster of nodes.
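
One concrete way to avoid sending all state to all nodes is to partition ownership of switches across the controller cluster, so each node holds and pushes state only for its share. The sketch below uses rendezvous (highest-random-weight) hashing for that assignment; the technique, names, and cluster layout are an illustrative choice, not something prescribed here, and a real system would likely track cluster membership in a coordination service such as ZooKeeper. Its useful property is that when a controller leaves, only the switches it owned change hands.

```python
import hashlib


def owner(switch_id: str, controllers: list) -> str:
    """Rendezvous hashing: the controller with the highest hash score for this switch owns it."""
    def score(ctrl: str) -> bytes:
        return hashlib.sha256(f"{ctrl}/{switch_id}".encode()).digest()
    return max(controllers, key=score)


switches = [f"tor-{i}" for i in range(1, 9)]
before = {s: owner(s, ["ctl-a", "ctl-b", "ctl-c"]) for s in switches}
after = {s: owner(s, ["ctl-a", "ctl-b"]) for s in switches}  # ctl-c fails
moved = [s for s in switches if before[s] != after[s]]
print(moved)  # only switches that ctl-c used to own
```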

And what might you lose?

- Scale? Given that systems of similar design are used today to orchestrate hundreds of thousands of servers and petabytes of data, scale should not be an issue if the system is built right. There are OpenFlow-like solutions deployed today that have been tested to tens of thousands of ports.

- Multi-vendor support? If the switches support a standard like OpenFlow (and can forward Ethernet), then you should be able to use any vendor’s switch.

- However, you most likely will not have interoperability at the controller level (unless a standardized software platform is introduced).

It is important to note that in both approaches (traditional networking and building a modern distributed system), the state being managed is the same: the network-wide forwarding and configuration state of the switching chips. Only the method of its management has changed.

So where does OpenFlow fit in all this? It’s really a very minor, unimportant, easy-to-replace mechanism in a broader development paradigm. Whether it is OpenFlow or a simple RPC schema on protocol buffers/thrift/json/etc. just doesn’t matter. What does matter is that the protocol has very clear data consistency semantics, so that it can be used to build a robust remote state management system.
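
Barriers are a good example of the consistency semantics that matter. Under OpenFlow’s barrier semantics, a switch must finish processing all messages received ahead of a barrier request, and only then send the barrier reply, so a controller can tell which earlier changes are in effect. The sketch below is hypothetical controller bookkeeping built on that guarantee. It assumes any error for an earlier message reaches the controller before the barrier reply, and it leaves out the actual transport.

```python
import itertools


class PendingTracker:
    """Hypothetical controller-side bookkeeping for changes sent to one switch."""

    def __init__(self) -> None:
        self._xids = itertools.count(1)  # transaction ids, increasing per message
        self.unconfirmed: dict = {}      # xid -> description of a pending change
        self.barriers: dict = {}         # barrier xid -> xids sent before it
        self.confirmed: list = []
        self.failed: dict = {}           # description -> error reason

    def send_change(self, desc: str) -> int:
        xid = next(self._xids)
        self.unconfirmed[xid] = desc     # a real controller would also transmit it here
        return xid

    def send_barrier(self) -> int:
        xid = next(self._xids)
        self.barriers[xid] = [x for x in self.unconfirmed if x < xid]
        return xid

    def on_error(self, xid: int, reason: str) -> None:
        # The switch rejected this message, so the barrier reply must not confirm it.
        if xid in self.unconfirmed:
            self.failed[self.unconfirmed.pop(xid)] = reason

    def on_barrier_reply(self, xid: int) -> None:
        # The switch has finished everything sent before this barrier; whatever
        # was not reported as an error is now known to be applied.
        for covered in self.barriers.pop(xid, []):
            if covered in self.unconfirmed:
                self.confirmed.append(self.unconfirmed.pop(covered))


t = PendingTracker()
a = t.send_change("flow A")
b = t.send_change("flow B")
barrier = t.send_barrier()
t.on_error(b, "table full")
t.on_barrier_reply(barrier)
print(t.confirmed, t.failed)  # ['flow A'] {'flow B': 'table full'}
```

Without a guarantee like this, a controller can only guess whether a change has taken effect, and robust remote state management is built on such guarantees.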

Do I believe that building networks in this manner is a net win? I really do. It may not be suitable for all deployment environments, but for those building networks as systems, it clearly has value.

I realize that the following is a tired example. But it is nevertheless true. Companies like Google, Yahoo, Amazon, and Facebook have changed how we think about infrastructure through kick-ass innovation in compute, storage and databases. The algorithm is simple: bad-ass developers + infrastructure = competitive advantage + general awesomeness.

However, lacking a consistent API across vendors and devices, the network has remained, in contrast, largely untouched. This is what will change.

Blah, blah, blah. Is it actually real? There are a number of production networks outside of research and academia running OpenFlow (or a similar mechanism) today. Generally these are built using whitebox hardware, since the vendor-supported OpenFlow supply chain is extremely immature. Clearly it is very early days, and these deployments are limited to the secretive, thrill-seeking fringe. However, the black art is escaping the cloister, and I suspect we’ll hear much more about these and new deployments in the near future.

What does this mean to the hardware ecosystem? Certainly less than the tech pundits would like to claim.

Minimally it will enable innovation for those who care, namely those who take pride in infrastructure innovation, and the many startups looking to offer products built on this model.

Maximally? Who the hell knows. Is there going to be an industry cataclysm in which the last companies standing are Broadcom, an ODM (Quanta), an OEM (Dell) and a software company to string them all together? No, of course not. But that doesn’t mean we won’t see some serious shifts in hegemony in the market over the next 5-10 years. And my guess is that today’s incumbents are going to have a hell of a time holding on. Why? For the following two reasons:

- The organizational white blood cells are too near-sighted (or too stupid) to allow internal efforts to build the correct systems. For example, I’m sure “overlay” is a four-letter word at Cisco because it robs hardware of value. This addiction to hardware development cycles and margins is a critical hindrance. So while these companies potter around with their 4-7 year ASIC design cycles, others are going to maximize what goes in software and start innovating like Lady Gaga with a sewing machine.

- The traditional vendors, to my knowledge, just don’t have the teams to build this type of software. At least not today. This can be bought or built, but it will take time. And it’s not at all clear that the white blood cells will let that happen.

So there you have it. OpenFlow is a small piece to a broader puzzle. It has warts, but with community involvement is getting improved, and much more importantly, it is being used.

This is a fun and insightful read. It shows the value of OpenFlow without pushing unrealistic hype. Nice.

Thanks James. I hear we bought you guys .. (http://etherealmind.com/vmware-openflow-failed-networking-plans/). At least the commute is good … 😉

I like the view of your conference rooms.

Sorry about that, got something confused there.

Oh, no problem at all. Perhaps this came from Pronto’s acquisition of Pica8

See, I knew there was something. 🙂

Martin,

all good points, though one thing you should consider, per your last point on vendors, is that historically in networking, folks who provided the “controller” have also provided the “data path” and vice versa (regardless of the fact that everything was “standard and open”). Now… we live in interesting times, so the future could be different... but something to think about.

Heya Saar,

Thanks for the comment, and definitely understood. I honestly have a hard time seeing a future in which a third party controller vendor can successfully manage a collection of switches from other vendors (without a tight partnership with all of the vendors). Managing remote state is just too tricky an engineering proposition for that.

However, I could see a commodity hardware vendor working with a controller company. Or an upstart networking company building both. Or the customer of the switches building their own software (as is done in some remote instances today).

These are certainly possibilities, though they will likely be more economics/GTM related than innovation related. Everyone has to bring something to the table, and then they have to stay fed. Having said that, I think there is some confusion between general-purpose networks (whatever that is...) and DC “Fabrics”. Fabrics, by their nature, should require less diversity of functionality while at the same time behaving more like “systems” (than more generic networks). These attributes could simplify the task of building these “systems” from “external” parts. But in any case, tight cohesion between all aspects of the “system” will be important even if you do manage everything via “open” protocols.

So nice to see such a fantastic and well-balanced summary of where OpenFlow fits into the bigger scheme of things.


Great post.

OpenFlow is in danger of being over-hyped to death (it can’t possibly live up to some of the hype out there) and bringing it into perspective is a service to us all.

Thanks Michael 🙂

Congrats Martin for the great post!

Your post is very much needed by the networking community, in the broadest sense of the word: from academia to industry, including media and press! There have been many articles in the networking / IT press that are either misleading or miss some important point about OpenFlow. But also in more technical fora like the e2e list, the picture of OpenFlow is not clear for everyone (cf. http://www.postel.org/pipermail/end2end-interest/2011-April/008182.html). To me, one crucial point you are demystifying is letting the system developer choose the distribution model and not having it fixed in the architecture. Another fundamental point that is commonly misunderstood is the reactive vs. proactive modes of operation that can be adopted with OpenFlow.

The only point I am “missing” in your post (it is there but hidden), and that you eloquently raised in the e2e discussion list, is the newly introduced trade-off: scalability and resilience for a simplified programming model!

Cheers,

Christian

Thanks Christian. You’re right, I should have spoken more directly to the programming model. That is, for some deployment environments in which HA and scale aren’t critical (e.g. home or small LAN) the system developer can choose to use a much simplified programming model, probably with a commensurately larger feature set.

Hello

Nice article. However, I have to admit that at the end of it I was no clearer on where the value/innovation is being added (perhaps I need to take more time and understand OpenFlow in more detail).

The only actual application that was discussed here was that of a very large logical router/switch that happens to run conventional protocols (OSPF etc.) on the external interfaces and allows for “innovation within the innards”. However, to the end user this hasn’t resulted in any new protocol or capability manifesting itself other than scale, and as it turns out, existing vendors are already developing large scalable router/switch platforms using “tightly coupled distributed systems”. If anything, it will actually be problematic for the end user to try and mix and match combinations of switches and get them all running in some federation managed by this controller.

So could you help me understand what new protocol/innovation is being delivered to the end user, other than perhaps scalability (which can also be achieved via other means)?

Thanks in advance!

OpenFlow itself doesn’t deliver anything other than a standard interface to a switch. Its value is that it is a standard and therefore should work across multiple vendors. For that reason, I often liken it to USB.

You might ask, “what is the value of a standard programmatic interface?”. Well, it allows those who want to, to develop their own software stacks for networks. And in particular, to use high-level distributed systems to do so rather than driving a proprietary API or CLI, or having to deal with a loosely coupled distribution model. I’ve worked closely with service providers, telcos, government agencies, and university research groups, all of whom wanted to develop their own stuff (and have!).

Remember, the end user of something like OpenFlow at this very early stage is someone who wants to build something themselves. Maybe to use internally (which is often the case) or maybe to resell.

You might then ask, “well what can they do that you can’t do with traditional gear”. Trivially, they can build what they want the way they want it and run it on the switches they want. I’ve seen BGP routers built on merchant silicon, I’ve seen highly proprietary security systems that integrate deeply with proprietary environments, I’ve seen networks which expose global TE abstractions that are driven by fairly sophisticated constraint solvers. And all of these have run production traffic in production networks.

Are all these things new and novel? I don’t know, maybe, maybe not. But I think that’s largely beside the point. OpenFlow is about providing system builders with tools to build stuff, and hopefully doing it in a way that is open. Innovation will come from the builders, not OpenFlow. Could they also innovate on a traditional platform? Sure! But I’d wager it would be more difficult, vendor specific, and likely more costly.

Ok, fair enough. Of course, someone who wants to build something themselves can simply pick up one of the many open source routing stacks, combine that with merchant silicon, and build exactly what they want, right?

Also, when you say “what is the value of a standard programmatic interface”, what layer are you referring to? Is it the north side interface of the controller, the south side of the controller, or both?

Picking up an open source routing stack doesn’t solve the same problem, right? I want to drive hardware, not build a standard router. A more relevant action would be to sign an NDA with Broadcom, Marvell, Fulcrum, or whomever and develop on top of their SDK to drive the chip directly. In fact, many companies I have worked with have done this.

However, there are limitations to this approach. It is chip specific (though chip-independent libraries are being developed, which is nice), it doesn’t provide you access to branded switches (like OpenFlow does), you couple the distribution model with the physical build-out, and you generally have to build in a very limited environment. Some system builders (myself included) would much rather build on a tightly coupled server cluster, and build the logic using high-level distributed systems practices and libraries. This brings the development of your network infrastructure in line with that of compute, storage, databases, etc.

I’m not claiming you can do anything new that you couldn’t do on top of the switch vendor SDK. However, I would claim that the distribution model is simpler, and the software development model is much richer.

I’m referring to the interface to the switch, not the controller.

Well said!